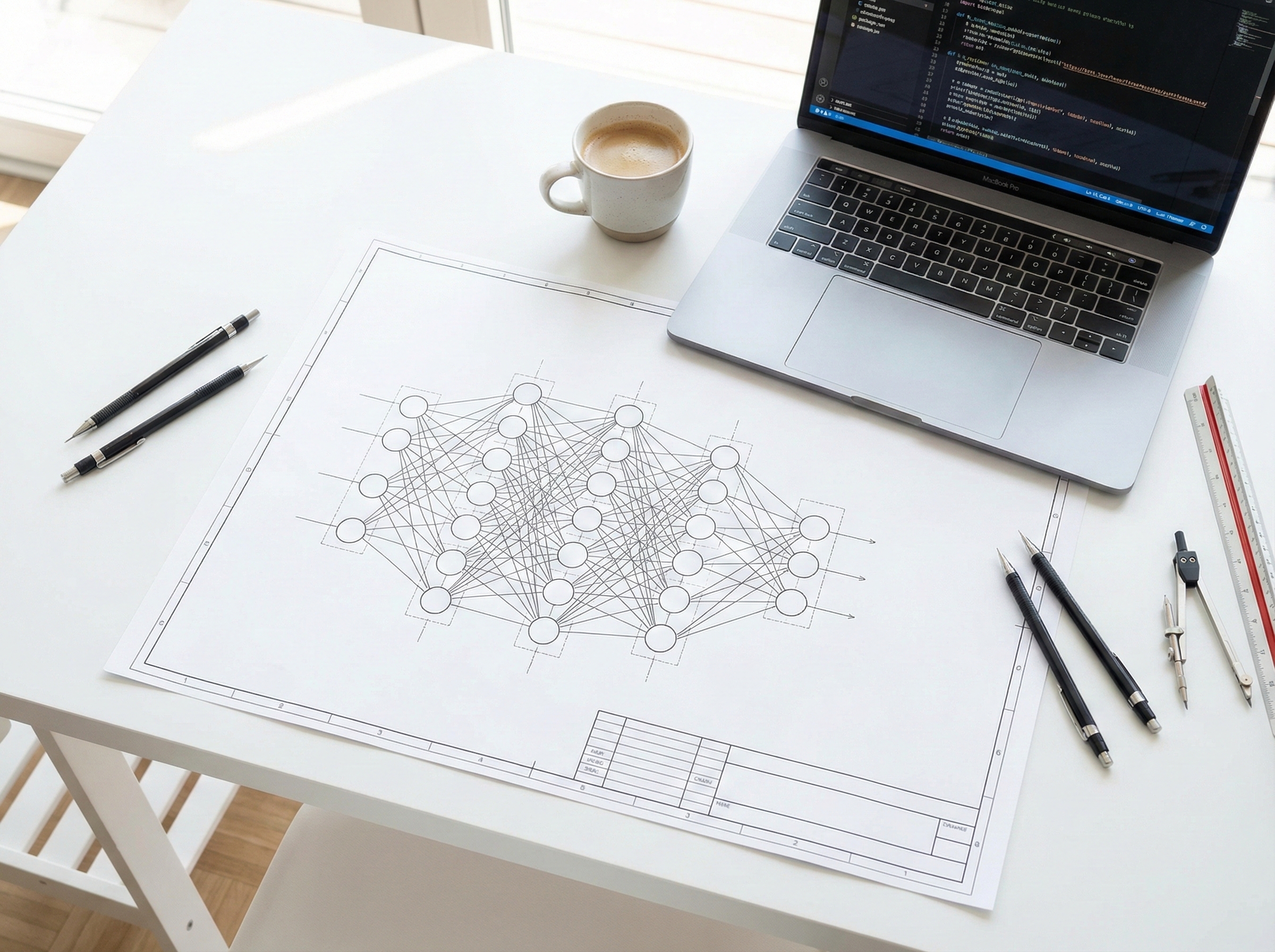

Beyond the Chatbot: Engineering Production-Ready LLM Systems

The Great Optimization

We've entered the era of efficiency. The "bigger is better" parameter race is no longer the whole story: in 2025, LLM engineering is focused on small, sparse, and specialized models. Why run a 70B-parameter model when a fine-tuned 7B model can match or outperform it on your specific domain at roughly a tenth of the cost?

RAG 2.0: The Knowledge Graph Revolution

Retrieval-Augmented Generation (RAG) has evolved, and simple vector search is often no longer enough. "GraphRAG" combines vector embeddings with knowledge graphs so that retrieval captures the relationships between data points, not just their semantic similarity. Grounding answers in these explicit relationships can reduce hallucinations and improve complex, multi-hop reasoning.
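The core GraphRAG retrieval step can be sketched in a few lines. This is a minimal illustration under stated assumptions, not any particular GraphRAG library: hand-written 3-dimensional vectors stand in for a real embedding model, a Python list of triples stands in for a graph database, and `EMBEDDINGS`, `EDGES`, and `graph_rag_retrieve` are hypothetical names. Vector similarity picks the seed entity, then a breadth-first expansion follows graph edges outward.

```python
import math

# Hypothetical toy data: hand-written 3-d vectors stand in for a real embedding model.
EMBEDDINGS = {
    "Acme Corp": [0.9, 0.1, 0.0],
    "Widget X": [0.8, 0.2, 0.1],
    "Jane Doe": [0.1, 0.9, 0.0],
    "Berlin": [0.0, 0.1, 0.9],
}

# Hypothetical knowledge graph stored as (subject, relation, object) triples.
EDGES = [
    ("Acme Corp", "manufactures", "Widget X"),
    ("Jane Doe", "is_ceo_of", "Acme Corp"),
    ("Acme Corp", "headquartered_in", "Berlin"),
]


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def graph_rag_retrieve(query_vec, k=1, hops=2):
    """Vector search for seed entities, then expand along graph edges."""
    # Step 1: semantic similarity picks the k closest entities as seeds.
    ranked = sorted(EMBEDDINGS, key=lambda n: cosine(query_vec, EMBEDDINGS[n]), reverse=True)
    seeds = ranked[:k]
    # Step 2: breadth-first expansion collects triples within `hops` edges of a seed,
    # surfacing related entities whose embeddings may look nothing like the query.
    frontier, seen, facts = set(seeds), set(seeds), []
    for _ in range(hops):
        next_frontier = set()
        for triple in EDGES:
            subj, obj = triple[0], triple[2]
            if (subj in frontier or obj in frontier) and triple not in facts:
                facts.append(triple)
                next_frontier.update({subj, obj} - seen)
        seen |= next_frontier
        frontier = next_frontier
    return seeds, facts


# Query embedding for e.g. "Who runs the company that makes Widget X?"
seeds, facts = graph_rag_retrieve([0.8, 0.2, 0.1])
context = "\n".join(f"{s} {r} {o}" for s, r, o in facts)
print(context)  # these facts would be passed to the LLM as grounded context
```

The query lands on `Widget X`, and Jane Doe's embedding is far from it, yet the two-hop expansion still surfaces `Jane Doe is_ceo_of Acme Corp`. Plain top-k vector search would have missed that fact; following relationships is what the graph adds.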

Alongside GraphRAG, a few adjacent trends are shaping production systems:

- Multimodal RAG: Ingesting PDFs, charts, videos, and audio into a unified knowledge base.

- Quantization: Deploying 4-bit and 8-bit quantized models to run on consumer hardware with minimal loss of reasoning capability.

- Evaluation pipelines: "LLM-as-a-Judge" frameworks that automatically score model outputs and catch regressions before a model update ships.
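Two pieces of arithmetic sit behind the quantization bullet: how much memory the weights need at a given bit width, and how a float is mapped onto a small integer grid. The sketch below assumes the simplest scheme, symmetric per-tensor int8 with a single scale; production toolchains typically use finer-grained (per-channel or group-wise) scales and methods such as GPTQ or AWQ, which this does not implement. All function names are hypothetical.

```python
def weight_memory_gb(num_params: float, bits: int) -> float:
    """Memory for the weights alone (ignores KV cache, activations, runtime overhead)."""
    return num_params * bits / 8 / 1e9


def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: map [-max|w|, max|w|] onto [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate floats; per-weight error is at most scale / 2 (plus float rounding)."""
    return [v * scale for v in q]


for params, bits in [(70e9, 16), (7e9, 16), (7e9, 8), (7e9, 4)]:
    print(f"{params / 1e9:.0f}B @ {bits}-bit: {weight_memory_gb(params, bits):.1f} GB")

weights = [0.42, -1.27, 0.003, 0.9]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q, max(abs(a - b) for a, b in zip(weights, restored)))
```

By this arithmetic, a 70B model at 16 bits needs about 140 GB for its weights alone, while a 7B model needs 14 GB at 16 bits and about 3.5 GB at 4 bits, which is what brings it within reach of consumer hardware.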
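An LLM-as-a-Judge regression check boils down to: run the candidate model over a fixed test set, have a judge score each answer against a reference, and block the update if the mean score drops. In this sketch the judge is `stub_judge`, a hypothetical offline stand-in (a substring check) so the example runs without an API; in a real pipeline it would send `JUDGE_PROMPT` to a grading model. The rubric, the 1-5 scale, and the 0.1 tolerance are all assumptions.

```python
import re
from statistics import mean
from typing import Callable

# Hypothetical grading rubric sent to the judge model.
JUDGE_PROMPT = (
    "Grade the candidate answer against the reference.\n"
    "Question: {question}\n"
    "Reference: {reference}\n"
    "Candidate: {candidate}\n"
    "Reply with a single integer score from 1 (wrong) to 5 (fully correct)."
)


def parse_score(raw: str) -> int:
    """Extract the first standalone 1-5 digit from the judge's reply."""
    match = re.search(r"\b([1-5])\b", raw)
    if match is None:
        raise ValueError(f"judge reply has no score: {raw!r}")
    return int(match.group(1))


def evaluate(cases: list[dict], generate: Callable[[str], str], judge: Callable[[str], str]) -> float:
    """Mean judge score of the model under test (`generate`) over a fixed test set."""
    scores = []
    for case in cases:
        candidate = generate(case["question"])
        prompt = JUDGE_PROMPT.format(candidate=candidate, **case)
        scores.append(parse_score(judge(prompt)))
    return mean(scores)


def passes_regression_gate(new: float, baseline: float, tolerance: float = 0.1) -> bool:
    """Reject a model update if its mean score drops more than `tolerance` below baseline."""
    return new >= baseline - tolerance


def stub_judge(prompt: str) -> str:
    """Offline stand-in for an LLM judge: "5" if the reference appears in the candidate, else "1"."""
    reference = re.search(r"^Reference: (.*)$", prompt, re.M).group(1)
    candidate = re.search(r"^Candidate: (.*)$", prompt, re.M).group(1)
    return "5" if reference.lower() in candidate.lower() else "1"


cases = [
    {"question": "Capital of France?", "reference": "Paris"},
    {"question": "What is 2 + 2?", "reference": "4"},
]
old_model = {"Capital of France?": "It is Paris.", "What is 2 + 2?": "The answer is 4."}.get
new_model = {"Capital of France?": "It is Paris.", "What is 2 + 2?": "Probably 5."}.get

baseline = evaluate(cases, old_model, stub_judge)
candidate_score = evaluate(cases, new_model, stub_judge)
print(baseline, candidate_score, passes_regression_gate(candidate_score, baseline))
```

Here the new model breaks the arithmetic question, its mean score falls from 5 to 3, and the gate rejects the update. Keeping the test set and judge prompt fixed across runs is what makes scores comparable between model versions.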

Engineering for Reliability

The difference between a demo and a product is reliability. Production LLM engineering today is roughly 80% guardrails, evals, and data pipelines, and only about 20% prompt engineering. It's about building systems that fail gracefully and recover automatically.
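Failing gracefully and recovering automatically usually combines three mechanisms: retries with exponential backoff for transient errors, an output guardrail that rejects malformed responses, and a fallback to a secondary model. The sketch below assumes each model is a plain `prompt -> str` callable and that the application expects a JSON object with an `"answer"` field; treat `TimeoutError` and `ConnectionError` as placeholders for whatever transient exceptions your client library raises. The `sleep` parameter is injectable so tests can skip real waits, and all names are hypothetical.

```python
import json
import time
from typing import Callable, Optional

Model = Callable[[str], str]  # hypothetical: prompt in, raw completion out


def validate_answer(raw: str) -> Optional[dict]:
    """Output guardrail: accept only a JSON object with a string "answer" field."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return None
    if isinstance(data, dict) and isinstance(data.get("answer"), str):
        return data
    return None


def call_with_fallback(
    prompt: str,
    models: list[Model],
    retries: int = 2,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> Optional[dict]:
    """Try each model in order, retrying transient errors with exponential backoff.

    Returns the first validated output, or None so the caller can show a safe default.
    """
    for model in models:
        for attempt in range(retries + 1):
            try:
                raw = model(prompt)
            except (TimeoutError, ConnectionError):
                # Transient failure: wait base_delay * 2**attempt, then retry (no wait after the last try).
                if attempt < retries:
                    sleep(base_delay * 2 ** attempt)
                continue
            result = validate_answer(raw)
            if result is not None:
                return result
            # Otherwise the output failed the guardrail; fall through and retry.
    return None


attempts = {"primary": 0}


def flaky_primary(prompt: str) -> str:
    attempts["primary"] += 1
    if attempts["primary"] == 1:
        raise TimeoutError("upstream timeout")
    return "Sure! The answer is 42."  # not JSON, so the guardrail rejects it


def backup(prompt: str) -> str:
    return '{"answer": "42"}'


result = call_with_fallback("What is 6 x 7?", [flaky_primary, backup], sleep=lambda s: None)
print(result, attempts["primary"])
```

Returning `None` instead of raising lets the caller degrade to a safe default, such as a canned message, rather than surfacing a stack trace to the user.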